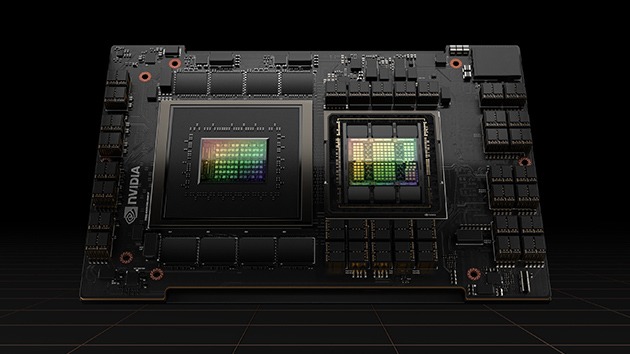

H100 for mainstream servers comes with a five-year subscription to the NVIDIA AI Enterprise software suite, including enterprise support, which simplifies AI adoption while delivering strong performance. This gives organizations access to the AI frameworks and tools they need to build H100-accelerated AI workflows such as AI chatbots, recommendation engines, and vision AI.

NVIDIA H100 Tensor Core GPU

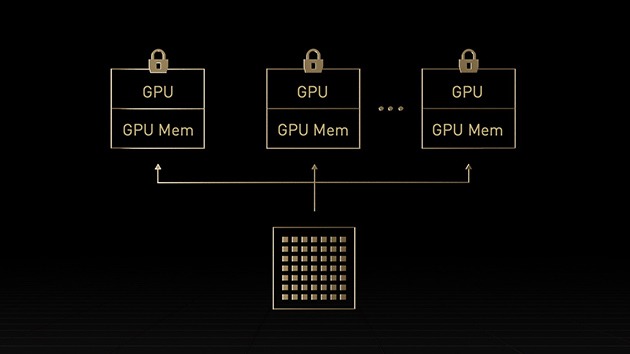

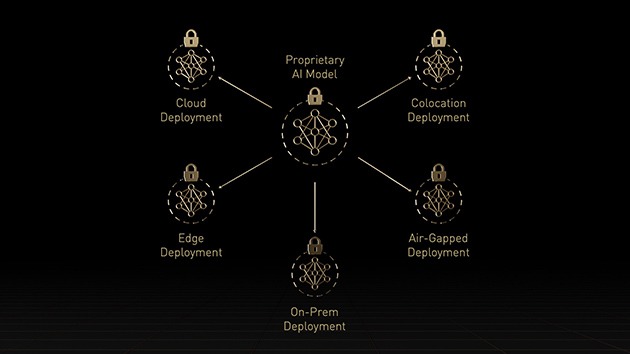

Delivers excellent performance, scalability, and security for a wide range of data centers.